High-quality 3D scenes synthesized by SceneWiz3D. Prompts: (1) a bedroom, with large windows revealing sunset outside, Ukiyo-e style; (2) a washing room, realistic detailed photo; (3) an astronaut in the mysterious space.

Abstract

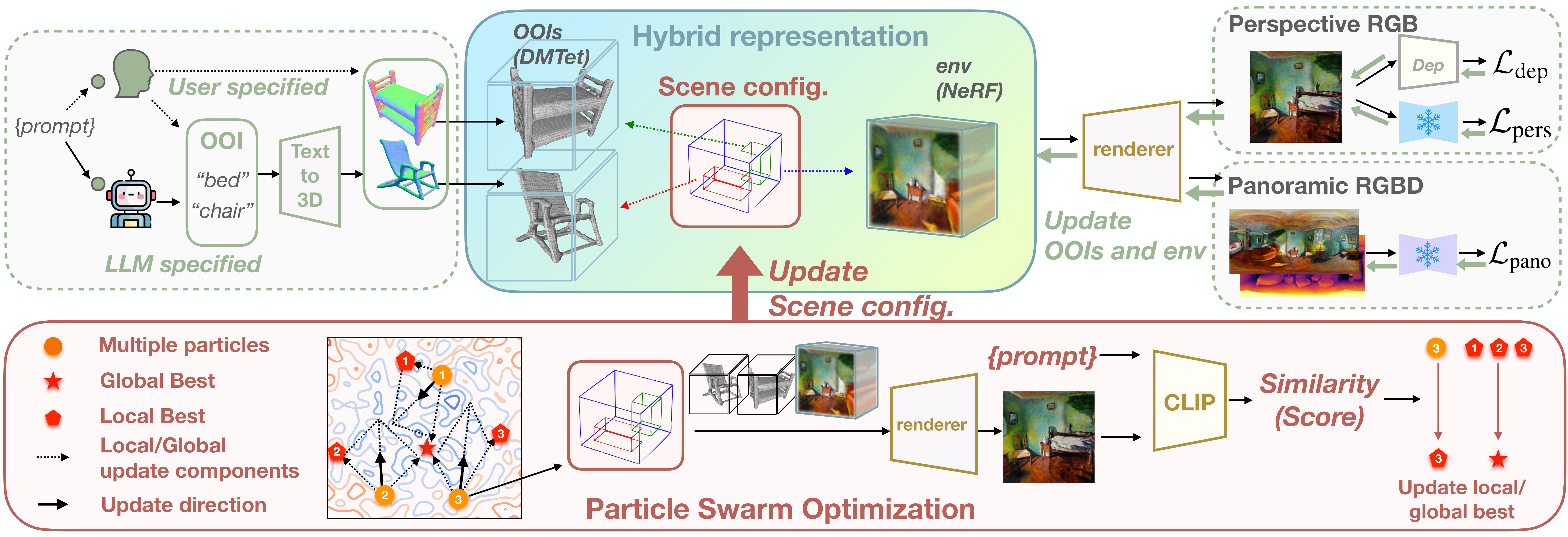

We introduce SceneWiz3D, a novel approach to synthesize high-fidelity 3D scenes from text. We marry the locality of objects with the globality of scenes by introducing a hybrid 3D representation: explicit for objects and implicit for scenes. Remarkably, an object, being represented explicitly, can be either generated from text using conventional text-to-3D approaches or provided by users. To configure the layout of the scene and automatically place objects, we apply the Particle Swarm Optimization technique during the optimization process. Furthermore, in the text-to-scene scenario, it is difficult for certain parts of the scene (e.g., corners, occlusion) to receive multi-view supervision, leading to inferior geometry. To mitigate the lack of such supervision, we incorporate an RGBD panorama diffusion model as an additional prior, resulting in high-quality geometry. Extensive evaluation supports that our approach achieves superior quality over previous approaches, enabling the generation of detailed and view-consistent 3D scenes.

Synthesized 3D Scenes

Overview

Our goal is to create high-fidelity 3D scenes from text. We propose a hybrid scene representation in which objects of interest (OOIs) are modeled by Deep Marching Tetrahedra and the remaining part of the scene is modeled by a Neural Radiance Field (NeRF).

Because we disentangle OOIs from the rest of the scene in our hybrid representation, we need to determine the configuration of each object, including its coordinates, scaling factor, and rotation degree. We find that it is nontrivial to update this low-dimensional configuration with gradient descent, so we instead propose to use Particle Swarm Optimization (PSO) to automatically configure the scene's layout. Unlike prior works that only employ perspective-view distillation, we also incorporate LDM3D, a diffusion model finetuned on panoramic images in RGBD space, to provide additional guidance. During the optimization process, LDM3D provides additional prior information: the RGBD knowledge yields supervision in depth, while the panoramic knowledge mitigates the issue of limited views with perspective images and disambiguates the global structure of a scene.
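To make the layout search concrete, here is a minimal sketch of a generic PSO loop over a five-dimensional object configuration (x, y, z, scale, rotation in degrees). It is not the SceneWiz3D implementation: the function names, hyperparameters, bounds, and especially `toy_layout_cost` are assumptions made for illustration. In the actual pipeline the cost would presumably come from the diffusion-guided rendering objective, which is not reproduced here.

```python
import random

def pso_minimize(objective, bounds, n_particles=30, n_iters=100,
                 inertia=0.7, c_cognitive=1.5, c_social=1.5, seed=0):
    """Generic particle swarm minimizer (illustrative, gradient-free)."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Particles start uniformly inside the bounds with zero velocity.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                 # best position seen by each particle
    pbest_cost = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_cost.__getitem__)
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]  # best position seen by the swarm

    for _ in range(n_iters):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Velocity blends momentum, pull to own best, pull to swarm best.
                vel[i][d] = (inertia * vel[i][d]
                             + c_cognitive * r1 * (pbest[i][d] - pos[i][d])
                             + c_social * r2 * (gbest[d] - pos[i][d]))
                # Move, then clamp the coordinate back into its valid range.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            cost = objective(pos[i])
            if cost < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], cost
                if cost < gbest_cost:
                    gbest, gbest_cost = pos[i][:], cost
    return gbest, gbest_cost

# Hypothetical search ranges for (x, y, z, scale, rotation_degrees).
BOUNDS = [(-2.0, 2.0), (-2.0, 2.0), (-2.0, 2.0), (0.5, 2.0), (0.0, 360.0)]
# Hypothetical "ideal" placement used only by the toy cost below.
TARGET = [0.5, 0.0, -1.0, 1.2, 90.0]

def toy_layout_cost(config):
    """Stand-in objective: squared distance to TARGET (not the real loss)."""
    return sum((c - t) ** 2 for c, t in zip(config, TARGET))

best_config, best_cost = pso_minimize(toy_layout_cost, BOUNDS, n_iters=200)
print("best config:", [round(v, 3) for v in best_config])
print("best cost:", best_cost)
```

Because PSO only needs cost evaluations, it can search a small configuration vector without gradients, which fits the observation above that gradient descent is hard to apply to the layout parameters.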

Illustration of SceneWiz3D's pipeline

Artistic scenes

What will you see upon awakening from a deep dream?

Diverse scene types

Manipulating the scenes

The dynamic manipulation of objects will happen within one second in the video. Stay tuned!

Comparison with baselines

| Method | A bedroom with large windows revealing sunset outside | Washing room, realistic detailed photo | A bedroom by Pablo Picasso |
|---|---|---|---|
| DreamFusion | | | |
| ProlificDreamer | | | |
| Text2room | | | |
| LDM3D | | | |
| SceneWiz3D | | | |

Citation

@inproceedings{zhang2023scenewiz3d,
  author = {Qihang Zhang and Chaoyang Wang and Aliaksandr Siarohin and Peiye Zhuang and Yinghao Xu and Ceyuan Yang and Dahua Lin and Bo Dai and Bolei Zhou and Sergey Tulyakov and Hsin-Ying Lee},
  title = {{SceneWiz3D}: Towards Text-guided {3D} Scene Composition},
  booktitle = {arXiv},
  year = {2023}
}

Acknowledgments

We thank Songfang Han for the assistance in setting up SDFusion. We thank Aniruddha Mahapatra for fruitful discussions and comments about this work.

We borrow the source code of this website from GeNVS.